Isaac Asimov’s “Three Laws of Robotics”

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.
- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.
- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.[1]

Conversations about driverless cars just got a little more

difficult. Isaac Asimov’s “Three Laws of Robotics” cannot help

robotic cars in situations where they must choose between harm to passengers or harm to

pedestrians. A recent article[2]

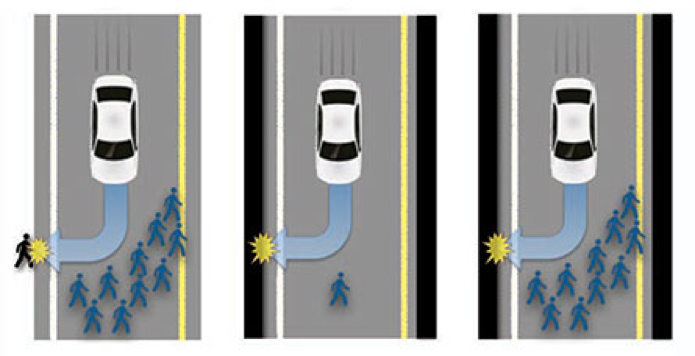

in Science News includes the

following graphic and explanation to illustrate the point.

J.-F. Bonnefon et al./Science 2016

“Driverless cars will occasionally face emergency situations. The car may have to determine whether to swerve into one passerby to avoid several pedestrians (left), swerve away from a pedestrian while harming its own passenger (middle), or swerve away from several pedestrians while harming its own passenger (right). Online surveys indicate most people want driverless cars that save passengers at all costs, even if passenger-sacrificing vehicles save more lives.”

Of course, one might argue that, on average, humans are no better at resolving such moral dilemmas. Perhaps the issue is that with a robotic car we know exactly what we will get. Programming will determine the choices a driverless car makes in any given situation, whereas humans are much less predictable: their choices depend on the degree of altruism in the individual driver. In a moral dilemma, we often do not know how an individual human will respond. Will they choose to sacrifice themselves for the sake of strangers? Will they sacrifice themselves and their child for those strangers? What about a scenario in which their pet would be sacrificed for the sake of unknown pedestrians? We can conduct surveys and obtain a statistically reliable average of the answers to these questions, but we know there will be outliers and unique decisions made on the spur of the moment. With robotic programming, the driverless car does not make a choice; it simply follows the program with which it was built. This puts the onus on the manufacturer rather than on a driver, a car, or a robotic brain. In a litigious society, this may be the problem that slows the progress of driverless cars.

[1] In 1942, the science fiction author Isaac Asimov published a short story called “Runaround,” in which he introduced three laws that governed the behaviour of robots. https://en.wikipedia.org/wiki/Runaround_(story)

[2] “Moral Dilemma Could Put Brakes on Driverless Cars,” Bruce Bower, June 23, 2016, Science News, https://www.sciencenews.org/article/moral-dilemma-could-put-brakes-driverless-cars