A recent pedestrian fatality

involving an autonomous car is simply the first such tragedy. Science News

reported that a woman died crossing the street when a

self-driving car, deployed by Uber, hit her.[1]

Writers, engineers, and computer science experts had predicted this for some

time. What will this mean specifically for the autonomous car industry and

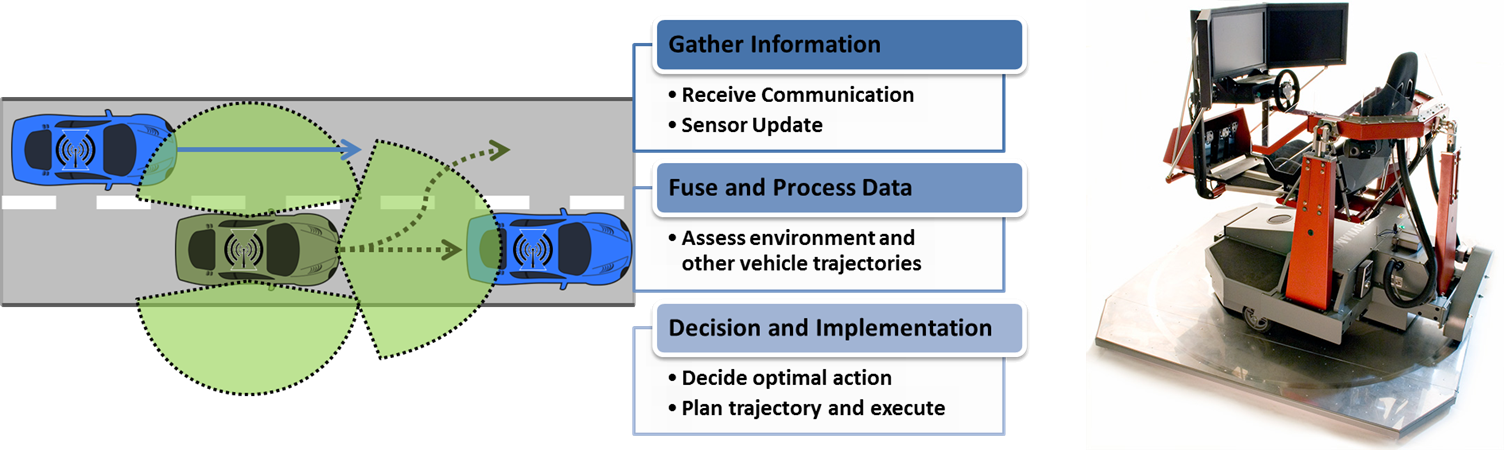

artificial intelligence in general? What algorithms, if any, need to be

rewritten? What logic did the self-driving car’s onboard computers use in

choosing to enter the area when a human being was also in the street?

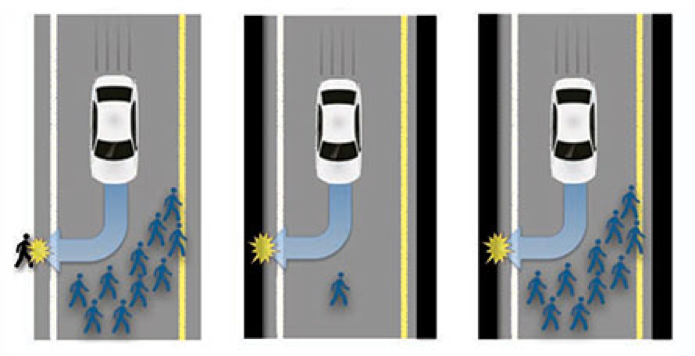

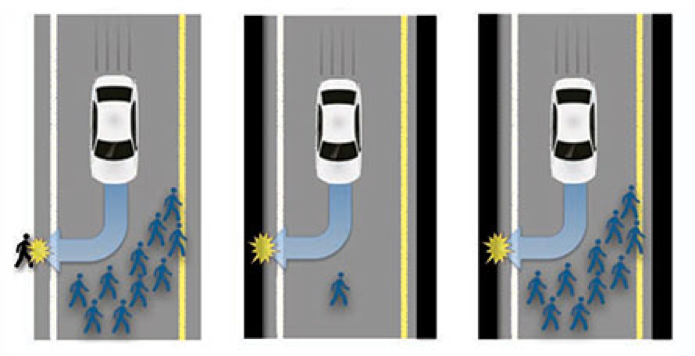

I have previously discussed this possibility and its implications in other posts.[2] A number of questions now come to mind. Did the car make a deliberate choice to hit the pedestrian to avoid harm to those in the car? Could the onboard AI have made a different choice? How could the outcome have been changed?

Investigators will need to ask these and several more questions before they can assign responsibility for this accident. What will the law have to say? Who could be fined? What sort of lawsuit could be filed? We await the outcome to see what manufacturers of autonomous cars might learn from this incident and what the ramifications will be for all car makers.
